How Accurate is AI-Generated Market Assessment?

The rise of artificial intelligence (AI), particularly large language models (LLMs) like ChatGPT, in information search has enabled users to gather and analyze data quickly in private, educational, and business settings.

As a data analytics company, Ticon has long been immersed in applying machine learning and artificial intelligence to data analytics, and we shared our experience with this hot topic in a blog post published over a year ago.

While we apply AI technologies in our business and, admittedly, use ChatGPT as a valuable assistant in refining our written content, the use of LLMs to automate market research, competitive analysis, and even market trend forecasting is still questionable. The accuracy of the data provided by currently available AI models is a key concern, especially when users rely on it for business decisions.

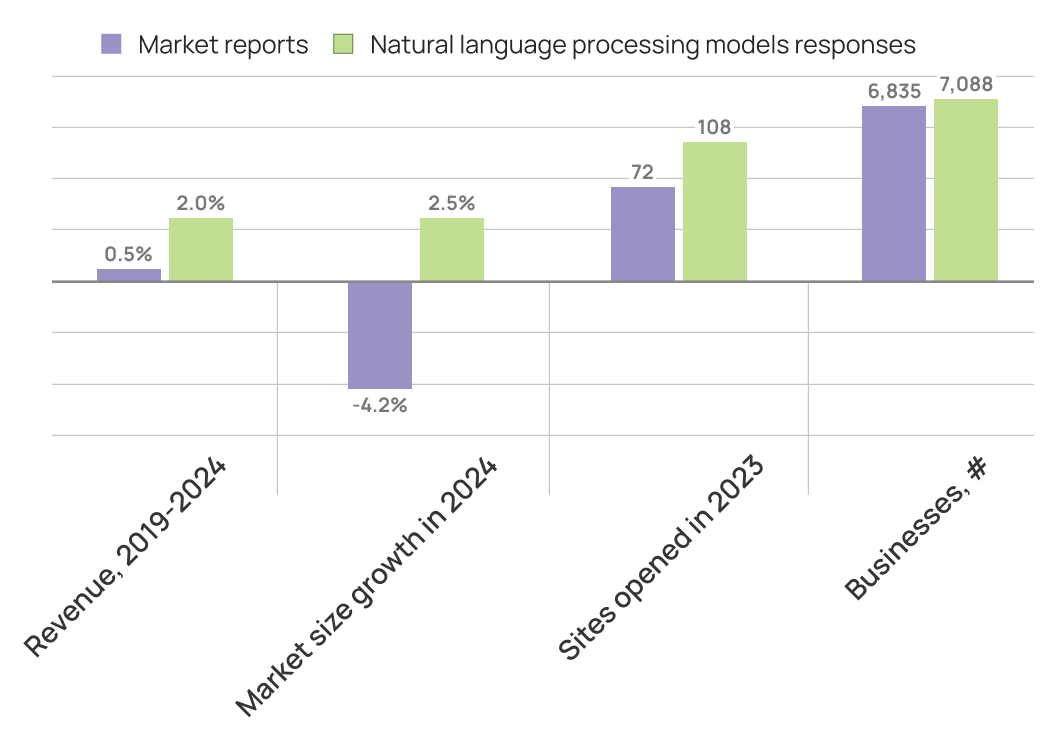

Disparaging AI without justification is as much of a mistake as accepting all of its results without thorough verification. Rather than do either, we conducted a study evaluating the reliability of ChatGPT-generated data analysis of the Australian retail market, focusing specifically on convenience stores. By comparing AI-generated content with verified sources, the study highlights the discrepancies and examines how closely the data generated by a language model aligns with reality.

To assess the accuracy of ChatGPT-generated data, we ran a series of identical queries about the market prospects for the selected niche and compared the responses to actual market data from industry reports [1]. The comparison covered market size, trends, competition, and the general landscape of the retail sector in the selected country.

The information provided by the language processing model showed significant deviations from the real data.

It is interesting to note that ChatGPT's answer to the simplest question, the number of businesses, shows a smaller error (3.7%) than its answers to the other questions, even though those are not especially sophisticated either: the response on the market size growth rate is off by 166% and even points in the opposite direction of the trend.
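For readers who want to run the same kind of check on their own queries, the error figures above can be understood as a relative error against the reference value, combined with a check of whether the trend direction matches. The sketch below is a minimal illustration under that assumption; the function names and all input numbers are hypothetical and are not the figures from our study.

```python
def relative_error_pct(ai_value: float, reference_value: float) -> float:
    """Absolute percentage error of an AI-reported figure against a reference figure."""
    if reference_value == 0:
        raise ValueError("reference value must be non-zero")
    return abs(ai_value - reference_value) * 100 / abs(reference_value)


def same_direction(ai_value: float, reference_value: float) -> bool:
    """True when both figures have the same sign, i.e. point to the same trend direction."""
    return (ai_value > 0) == (reference_value > 0) and (ai_value < 0) == (reference_value < 0)


# Hypothetical inputs for illustration only (not data from the study).
ai_business_count, ref_business_count = 1040, 1000   # number of businesses
ai_growth_rate, ref_growth_rate = -1.0, 2.0          # annual growth rate, in percent

count_error = relative_error_pct(ai_business_count, ref_business_count)
growth_error = relative_error_pct(ai_growth_rate, ref_growth_rate)
growth_direction_ok = same_direction(ai_growth_rate, ref_growth_rate)

print(f"Business count error: {count_error:.1f}%")
print(f"Growth rate error: {growth_error:.1f}%, same direction: {growth_direction_ok}")
```

An error above 100% on a growth rate, as in the hypothetical second case, is typically a sign that the AI figure and the reference figure have opposite signs, which is why the direction check is reported separately.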

We do not aim to determine why ChatGPT provided inaccurate responses. Moreover, we acknowledge that rephrasing the questions might yield different answers that could be more or less precise. However, rather than getting caught up in meta-rules, a situation well known in physics where "there are rules for choosing a solution, but no rules for choosing the rules themselves," we would like to underscore the importance of verifying AI-generated information against credible sources, especially when researching niche markets in specific regions or conducting any other study whose results can influence important decisions, revenue, or ROI.

References:

[1] Convenience Stores in Australia - Market Research Report (2014-2029)